The article "Performance optimizing hybrid OpenFlow controller" describes InMon's sFlow-RT controller. The controller makes use of the sFlow and OpenFlow standards and is optimized for real-time traffic engineering applications that manage large traffic flows, including DDoS mitigation, ECMP load balancing, LAG load balancing, and large flow marking.


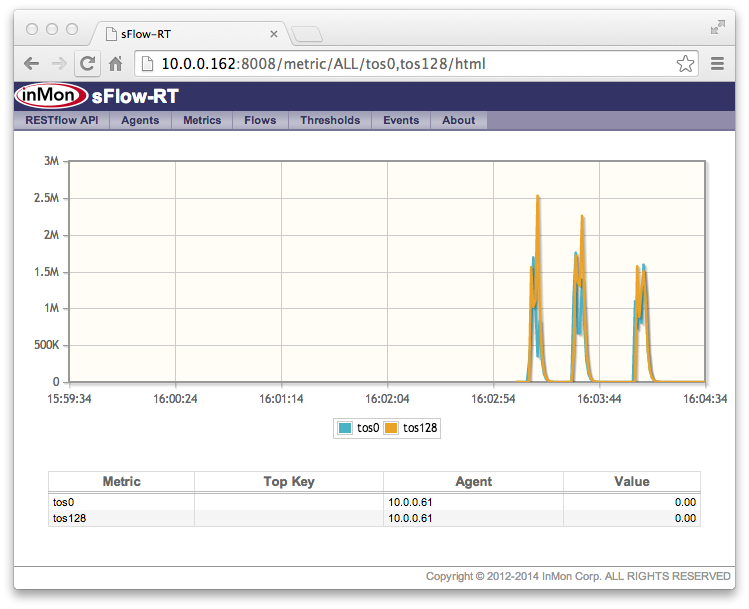


The previous article provided an example of large flow marking using an Alcatel-Lucent OmniSwitch 6900 switch. This article discusses how to replicate the example using HP Networking switches.

At present, the following HP switch models are listed as having OpenFlow support:

- FlexFabric 12900 Switch Series

- 12500 Switch Series

- FlexFabric 11900 Switch Series

- 8200 zl Switch Series

- HP FlexFabric 5930 Switch Series

- 5920 Switch Series

- 5900 Switch Series

- 5400 zl Switch Series

- 3800 Switch Series

- HP 3500 and 3500 yl Switch Series

- 2920 Switch Series

HP's OpenFlow implementation supports integrated hybrid mode, provided the OpenFlow controller pushes a default low-priority OpenFlow rule that matches all packets and applies the NORMAL action (i.e. instructs the switch to apply its default switching/routing forwarding to the packets).
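Why the default NORMAL rule matters can be illustrated with a small, self-contained JavaScript simulation of priority-based rule lookup. This is a hypothetical sketch, not HP's or sFlow-RT's implementation: the `flowTable`, `matches` and `lookup` names are made up, the match field names are borrowed from the script in this article, and the model only supports exact-match fields (an omitted field is treated as a wildcard). The highest-priority matching rule wins, so a priority 500 marking rule overrides the priority 0 catch-all rule for its own flow, and every other packet falls through to normal forwarding.

```javascript
// Hypothetical simulation of an OpenFlow table in integrated hybrid mode.
// Not the switch's or controller's code; field names mirror the article's script.
const flowTable = [
  // Default rule: empty match (all fields wildcarded), lowest priority, NORMAL action.
  { priority: 0, match: {}, actions: ["output=normal"] },
  // Example elephant-marking rule; addresses and ports are made up.
  {
    priority: 500,
    match: { dl_type: 2048, nw_proto: 6, nw_src: "10.0.0.1", nw_dst: "10.0.0.2",
             tp_src: 5001, tp_dst: 80 },
    actions: ["set_nw_tos=128", "output=normal"],
  },
];

// A rule matches when every field it specifies equals the packet's value.
function matches(rule, packet) {
  return Object.entries(rule.match).every(([field, value]) => packet[field] === value);
}

// Return the actions of the highest-priority matching rule, or null on a table miss.
function lookup(table, packet) {
  let best = null;
  for (const rule of table) {
    if (matches(rule, packet) && (best === null || rule.priority > best.priority)) {
      best = rule;
    }
  }
  return best === null ? null : best.actions;
}

const elephant = { dl_type: 2048, nw_proto: 6, nw_src: "10.0.0.1", nw_dst: "10.0.0.2",
                   tp_src: 5001, tp_dst: 80 };
const mouse = { ...elephant, tp_src: 5002 }; // different connection, no marking rule

console.log(JSON.stringify(lookup(flowTable, elephant))); // prints: ["set_nw_tos=128","output=normal"]
console.log(JSON.stringify(lookup(flowTable, mouse)));    // prints: ["output=normal"]
```

In this model, removing the priority 0 entry makes `lookup` return `null` (a table miss) for the mouse packet instead of handing it to normal forwarding.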

In this example, an HP 5400 zl switch is used to run a slightly modified version of the sFlow-RT controller JavaScript application described in "Performance optimizing hybrid OpenFlow controller":

```javascript
// Define large flow as greater than 100Mbits/sec for 0.2 seconds or longer
var bytes_per_second = 100000000/8;
var duration_seconds = 0.2;

var idx = 0;

setFlow('tcp',
  {keys:'ipsource,ipdestination,tcpsourceport,tcpdestinationport',
   value:'bytes', filter:'direction=ingress', t:duration_seconds}
);

setThreshold('elephant',
  {metric:'tcp', value:bytes_per_second, byFlow:true, timeout:2,
   filter:{ifspeed:[1000000000]}}
);

setEventHandler(function(evt) {
  var agent = evt.agent;
  var ports = ofInterfaceToPort(agent);
  if(ports && ports.length == 1) {
    var dpid = ports[0].dpid;
    var id = "mark" + idx++;
    // Flow key order: ipsource,ipdestination,tcpsourceport,tcpdestinationport
    var k = evt.flowKey.split(',');
    var rule = {
      priority:500, idleTimeout:20,
      match:{dl_type:2048, nw_proto:6, nw_src:k[0], nw_dst:k[1],
             tp_src:k[2], tp_dst:k[3]},
      // Mark the flow (ToS 128) and forward it normally
      actions:["set_nw_tos=128","output=normal"]
    };
    setOfRule(dpid,id,rule);
  }
},['elephant']);
```

The idleTimeout was increased from 2 to 20 seconds since the switch has a default Probe Interval of 10 seconds (the interval between OpenFlow counter updates). If the OpenFlow rule idleTimeout is set shorter than the Probe Interval, the switch will remove the OpenFlow rule before the flow ends.

Mar. 27, 2014 Update: The HP Switch Software OpenFlow Administrator's Guide K/KA/WB 15.14, Appendix B Implementation Notes, describes the effect of the probe interval on idle timeouts and explains how to change the default (using `openflow hardware statistics refresh rate`), but warns that shorter refresh rates will increase CPU load on the switch.

The following command line arguments load the script and enable OpenFlow on startup:

```
-Dscript.file=ofmark.js \
-Dopenflow.controller.start=yes \
-Dopenflow.controller.addNormal=yes
```

The additional argument, `-Dopenflow.controller.addNormal=yes`, instructs the sFlow-RT controller to install the wildcard OpenFlow NORMAL rule automatically when the switch connects.

The screen capture at the top of the page shows a mixture of small flows ("mice") and large flows ("elephants") generated by a server connected to the HP 5406 zl switch. The graph at the bottom right shows the mixture of unmarked traffic being sent to the switch. The sFlow-RT controller receives a stream of sFlow measurements from the switch, detects each elephant flow in real time, and immediately installs an OpenFlow rule that matches the flow and instructs the switch to mark it by setting the IP type of service bits. The top right chart shows the traffic upstream of the switch, and it can be clearly seen that each elephant flow has been identified and marked, while the mice have been left unmarked.
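When checking the marked traffic in a packet capture, note that the script's `set_nw_tos=128` action specifies a type of service byte value, while many capture tools display the DSCP field instead. DSCP occupies the upper six bits of that byte (RFC 2474) and the lower two bits carry ECN (RFC 3168). The helper names below are hypothetical; this sketch only shows the bit arithmetic that maps ToS 128 to DSCP 32 (class selector CS4).

```javascript
// Split an IPv4 type of service byte into DSCP (upper six bits, RFC 2474)
// and ECN (lower two bits, RFC 3168). Helper names are hypothetical.
function tosToDscp(tos) {
  return (tos >> 2) & 0x3f;
}

function tosToEcn(tos) {
  return tos & 0x03;
}

// Class selector code points are multiples of 8 (CS0..CS7), so CSn = n * 8.
function classSelector(dscp) {
  return dscp % 8 === 0 ? "CS" + dscp / 8 : null;
}

const markedTos = 128; // value used by the set_nw_tos action in the script
console.log(tosToDscp(markedTos), tosToEcn(markedTos), classSelector(tosToDscp(markedTos)));
// prints: 32 0 CS4
```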

Note: While this demonstration only used a single switch, the solution easily scales to hundreds of switches and thousands of edge ports.

The results from the HP switch are identical to those obtained with the Alcatel-Lucent switch, demonstrating the multi-vendor interoperability provided by the sFlow and OpenFlow standards. In addition, sFlow-RT's support for an open, standards-based programming environment (JavaScript/ECMAScript) makes it an ideal platform for rapidly developing and deploying traffic engineering SDN applications in existing networks.