The latest release of InMon's sFlow-RT controller adds integrated hybrid OpenFlow support - optimized for real-time traffic engineering applications that manage large traffic flows, including:

- DDoS mitigation
- ECMP load balancing
- LAG load balancing
- large flow marking, etc.

This article discusses the evolving architecture of software defined networking (SDN) and the role of analytics and traffic engineering. InMon's sFlow-RT controller is used to provide practical examples of the architecture.

The article, Fabric: A Retrospective on Evolving SDN by Martin Casado, Teemu Koponen, Scott Shenker, and Amin Tootoonchian, makes the case for a two-tier software defined networking (SDN) architecture comprising a smart edge and an efficient core. The article, Pragmatic software defined networking on this blog, examines how the edge is moving into virtual switches, with tunneling (VXLAN, NVGRE, GRE, STT) used to virtualize the network and decouple the edge from the core. As complex policy decisions move to the network edge, the core fabric is left with the task of efficiently managing physical resources in order to deliver low latency, high bandwidth connectivity between edge switches.

First generation SDN controllers were designed before the edge / core split became apparent and contain a complex set of features that limit their performance and scalability. The sFlow-RT controller represents a second generation design, targeted specifically at the challenge of optimizing the performance of the physical network fabric.

Equal cost multi-path routing (ECMP), link aggregation (LAG) and multi-chassis link aggregation (MLAG) provide methods for spreading traffic across multiple paths in data center network fabrics in order to deliver the bandwidth needed to support big data and cloud workloads. However, these methods of load balancing do not handle all types of traffic well - in particular, long duration, high bandwidth "elephant" flows - see Of Mice and Elephants by Martin Casado and Justin Pettit with input from Bruce Davie, Teemu Koponen, Brad Hedlund, Scott Lowe, and T. Sridhar. The article SDN and large flows on this blog reviews traffic studies and discusses the benefits of actively managing large flows using an SDN controller.
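The limitation of hash-based load balancing can be illustrated with a minimal sketch. It assumes a toy string hash and hypothetical names (`hashFlow`, `selectPath`, `pathLoads`); real switches use their own hardware hash functions. The point is only that the path is chosen from packet header fields, so the size of a flow plays no part in where it lands, and two elephants may share a path while other paths sit idle.

```javascript
// Toy hash over the flow's 5-tuple. Illustrative only: this is NOT the
// hash function used by any particular switch.
function hashFlow(flow) {
  var key = [flow.src, flow.dst, flow.proto, flow.sport, flow.dport].join(',');
  var h = 0;
  for (var i = 0; i < key.length; i++) {
    h = (h * 31 + key.charCodeAt(i)) >>> 0; // keep as unsigned 32-bit value
  }
  return h;
}

// Hash-based path selection: the flow's bandwidth is never consulted.
function selectPath(flow, pathCount) {
  return hashFlow(flow) % pathCount;
}

// Sum the offered load (bits per second) that lands on each path.
function pathLoads(flows, pathCount) {
  var loads = [];
  for (var p = 0; p < pathCount; p++) {
    loads.push(0);
  }
  flows.forEach(function (f) {
    loads[selectPath(f, pathCount)] += f.bps;
  });
  return loads;
}

// Hypothetical traffic mix: two elephants and one mouse over four paths.
var flows = [
  {src: '10.0.0.1', dst: '10.0.1.1', proto: 6, sport: 40000, dport: 5001, bps: 900000000},
  {src: '10.0.0.2', dst: '10.0.1.2', proto: 6, sport: 40001, dport: 5001, bps: 800000000},
  {src: '10.0.0.3', dst: '10.0.1.3', proto: 6, sport: 40002, dport: 80, bps: 20000}
];
var loads = pathLoads(flows, 4);
```

Every packet of a given flow follows the same path, which preserves packet ordering, but nothing in `selectPath` prevents both elephants from being assigned to the same link.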

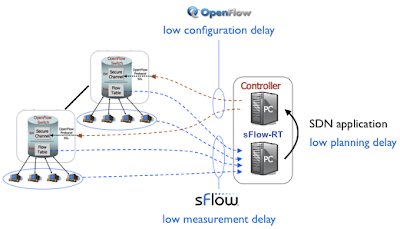

The sFlow-RT controller makes use of two critical technologies that allow it to actively manage large flows in large scale production networks:

- sFlow - The sFlow standard is widely supported by data center switch vendors and merchant silicon and provides the scalability and rapid detection of large flows needed for effective real-time control.

- Integrated hybrid OpenFlow - With integrated hybrid OpenFlow, the normal forwarding mechanisms in switches (ECMP, LAG, MLAG) are the default for handling traffic, i.e. packets are never sent to the controller to make forwarding decisions. OpenFlow is used to selectively override default forwarding in order to improve performance. This approach is extremely scalable and robust - there is minimal overhead associated with maintaining the OpenFlow connections between the controller and the switches, and the network will continue to forward traffic if the controller fails.
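The integrated hybrid model can be sketched as a simple lookup. This is a simplified, hypothetical model of a switch's behavior (the `matches` and `forward` functions are illustrative names, not part of sFlow-RT or OpenFlow): the highest priority matching rule wins, and anything that matches no rule falls through to normal forwarding rather than being sent to the controller.

```javascript
// Return true when every field in the rule's match equals the packet's field.
function matches(match, pkt) {
  for (var field in match) {
    if (match.hasOwnProperty(field) && match[field] !== pkt[field]) {
      return false;
    }
  }
  return true;
}

// Pick the highest priority matching rule. With no matching rule the packet
// is handled by the switch's normal forwarding (ECMP, LAG, MLAG).
function forward(pkt, rules) {
  var best = null;
  rules.forEach(function (r) {
    if (matches(r.match, pkt) && (best === null || r.priority > best.priority)) {
      best = r;
    }
  });
  return best ? best.actions : ['output=normal'];
}

// A single override for one TCP connection; everything else is untouched.
var rules = [
  {priority: 500,
   match: {nw_src: '10.0.0.1', nw_dst: '10.0.1.1', tp_src: '40000', tp_dst: '5001'},
   actions: ['set_nw_tos=128', 'output=normal']}
];
var elephant = {nw_src: '10.0.0.1', nw_dst: '10.0.1.1', tp_src: '40000', tp_dst: '5001'};
var mouse = {nw_src: '10.0.0.3', nw_dst: '10.0.1.3', tp_src: '40002', tp_dst: '80'};
```

With an empty rule table, as would be the case if the controller had never connected, `forward` returns the normal action for every packet, which is why the network keeps forwarding traffic when the controller is unavailable.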

A demonstration using an older version of sFlow-RT with an embedded JavaScript DDoS mitigation application and an external OpenFlow controller (OpenDaylight) won the SDN Idol competition at the 2014 Open Networking Summit - see Real-time SDN Analytics for DDoS mitigation for a video of the live demonstration and details of the competition.

Much of the complexity in developing SDN control applications involves sharing and distributing state between the analytics engine, application, and OpenFlow controller. Modifying the DDoS mitigation scripts presented on this blog to use sFlow-RT's embedded OpenFlow controller is straightforward and delivers the performance, scalability and robustness needed to move the DDoS mitigation applications into production.

However, this article uses the interesting problem of detecting and marking elephant flows, described in Of Mice and Elephants and Marking large flows, to demonstrate the sFlow-RT controller. Before looking at how sFlow-RT handles large flow marking, it is worth looking at existing approaches.

The article, Elephant Flow Mitigation via Virtual-Physical Communication, on VMware's Network Virtualization blog describes how Open vSwitch was modified to include an "NSX Elephant agent" to detect large flows. When a large flow is detected, a notification is sent to the HP VAN SDN Controller, which runs a special application that responds to the large flow notifications. The industry’s first east-west federated solution on HP's Networking blog further describes the solution, providing a demonstration in which large flows are given a different priority marking from small flows.

In contrast, the following sFlow-RT controller JavaScript application implements large flow marking:

    // Define large flow as greater than 100Mbits/sec for 0.2 seconds or longer
    var bytes_per_second = 100000000/8;
    var duration_seconds = 0.2;

    var idx = 0;

    setFlow('tcp',
      {keys:'ipsource,ipdestination,tcpsourceport,tcpdestinationport',
       value:'bytes', filter:'direction=ingress', t:duration_seconds}
    );

    setThreshold('elephant',
      {metric:'tcp', value:bytes_per_second, byFlow:true, timeout:2,
       filter:{ifspeed:[1000000000]}}
    );

    setEventHandler(function(evt) {
      var agent = evt.agent;
      var ports = ofInterfaceToPort(agent);
      if(ports && ports.length == 1) {
        var dpid = ports[0].dpid;
        var id = "mark" + idx++;
        var k = evt.flowKey.split(',');
        var rule = {
          priority:500, idleTimeout:2,
          match:{dl_type:2048, nw_proto:6, nw_src:k[0], nw_dst:k[1],
                 tp_src:k[2], tp_dst:k[3]},
          actions:["set_nw_tos=128","output=normal"]
        };
        setOfRule(dpid,id,rule);
      }
    },['elephant']);

The following command line arguments load the script and enable OpenFlow on startup:

    -Dscript.file=ofmark.js -Dopenflow.controller.start=yes

Some notes on the script:

- The 100Mbits/s threshold for large flows was selected because it represents 10% of the bandwidth of the 1Gigabit access ports on the network

- The setFlow filter specifies ingress flows since the goal is to mark flows as they enter the network

- The setThreshold filter specifies that thresholds are only applied to 1Gigabit access ports

- The OpenFlow rule generated in setEventHandler exactly matches the addresses and ports in each TCP connection and includes an idleTimeout of 2 seconds. This means that OpenFlow rules are automatically removed by the switch when the flow becomes idle without any further intervention from the controller.
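The arithmetic and the rule construction described in these notes can be checked in isolation. The following is a standalone sketch in plain JavaScript that does not call any sFlow-RT functions; `buildMarkRule` is a hypothetical helper that mirrors the body of the event handler, and the sample flow key is made up for illustration.

```javascript
// Threshold: 10% of a 1 Gbit/s access port, expressed in bytes per second
// because the flow definition uses value:'bytes'.
var ACCESS_PORT_BPS = 1000000000;            // 1 Gbit/s
var thresholdBps = ACCESS_PORT_BPS / 10;     // 100 Mbit/s
var thresholdBytesPerSec = thresholdBps / 8; // 12,500,000 bytes/s

// Hypothetical helper mirroring the event handler: split the flow key
// (in the order given by the setFlow keys) and build the marking rule.
function buildMarkRule(flowKey) {
  var k = flowKey.split(',');
  return {
    priority: 500,
    idleTimeout: 2,        // the switch removes the rule after 2 idle seconds
    match: {
      dl_type: 2048,       // 0x0800, IPv4
      nw_proto: 6,         // TCP
      nw_src: k[0], nw_dst: k[1],
      tp_src: k[2], tp_dst: k[3]
    },
    actions: ['set_nw_tos=128', 'output=normal']
  };
}

// The ToS byte value 128 is binary 10000000. The upper six bits form the
// DSCP field and the upper three bits the legacy IP precedence.
var tos = 128;
var dscp = tos >> 2;       // 32
var precedence = tos >> 5; // 4

// Made-up flow key for illustration.
var rule = buildMarkRule('10.0.0.1,10.100.10.152,40000,5001');
```

Note that the ports extracted from the flow key are strings, exactly as in the original script, and that the `output=normal` action hands the marked packet back to the switch's normal forwarding.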

The iperf tool can be used to generate a sequence of large flows to test the controller:

    while true; do iperf -c 10.100.10.152 -i 20 -t 20; sleep 20; done

The following screen capture shows a basic test setup and results:

The screen capture shows a mixture of small flows ("mice") and large flows ("elephants") generated by a server connected to an edge switch (in this case an Alcatel-Lucent OmniSwitch 6900). The graph at the bottom right shows the mixture of unmarked traffic being sent to the switch. The sFlow-RT controller receives a stream of sFlow measurements from the switch and detects each elephant flow in real time, immediately installing an OpenFlow rule that matches the flow and instructs the switch to mark the flow by setting the IP type of service bits. The traffic upstream of the switch is shown in the top right chart, and it can be clearly seen that each elephant flow has been identified and marked, while the mice have been left unmarked.

Note: While this demonstration only used a single switch, the solution easily scales to hundreds of switches and thousands of edge ports.

The sFlow-RT large flow marking application is much simpler than the large flow marking solution demonstrated by HP and VMware, which requires special purpose instrumentation embedded in a customized virtual switch and complex inter-controller communication. In contrast, the sFlow-RT controller leverages standard sFlow instrumentation built into commodity network devices to detect large flows in real time.

Over 40 network vendors support the sFlow standard, including most of the NSX network gateway services partners and the HP 5930AF used in the HP / VMware demo. In addition, sFlow is widely available in virtual switches, including: Open vSwitch, Hyper-V Virtual Switch, IBM Distributed Virtual Switch 5000v, and HP FlexFabric Virtual Switch 5900v.

While it is possible to mark large flows based on a simple notification - as was demonstrated by HP/VMware - load balancing requires complete visibility into all links in the fabric. Using sFlow instrumentation embedded in the network devices, sFlow-RT has a real-time view of the utilization on all links, together with the information on all large flows and their paths across the fabric, that is needed for load balancing.
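A small sketch shows why a notification alone is not enough for load balancing. It is not sFlow-RT's algorithm; the function names, link names and utilization figures are all hypothetical. Choosing a better path for an elephant requires the current utilization of every link on every candidate path, which is the fabric-wide view described above.

```javascript
// Utilization of the busiest link on a path (0..1). Links without a
// measurement are treated as idle in this simplified model.
function bottleneck(path, utilization) {
  var worst = 0;
  path.forEach(function (link) {
    var u = utilization[link] || 0;
    if (u > worst) {
      worst = u;
    }
  });
  return worst;
}

// Choose the candidate path whose busiest link is least utilized.
function leastLoadedPath(paths, utilization) {
  var best = null;
  var bestLoad = Infinity;
  paths.forEach(function (p) {
    var load = bottleneck(p, utilization);
    if (load < bestLoad) {
      bestLoad = load;
      best = p;
    }
  });
  return best;
}

// Hypothetical two-path fabric: leaf1 reaches leaf2 via spine1 or spine2.
var paths = [
  ['leaf1-spine1', 'spine1-leaf2'],
  ['leaf1-spine2', 'spine2-leaf2']
];
var utilization = {
  'leaf1-spine1': 0.9, 'spine1-leaf2': 0.1,
  'leaf1-spine2': 0.4, 'spine2-leaf2': 0.5
};
var chosen = leastLoadedPath(paths, utilization);
```

In this made-up example the path through spine2 is selected because its busiest link is at 50% utilization, compared with 90% on the path through spine1. A controller that only knows that an elephant exists, without the link measurements, cannot make this comparison.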

Why sFlow? What about other measurement protocols like Cisco NetFlow, IPFIX, SNMP, or using OpenFlow counters? The sFlow standard is uniquely suited to real-time traffic engineering because it provides the low latency, scalability, and flexibility needed to support SDN traffic engineering applications. For a detailed discussion of the requirements for analytics driven control, see Performance Aware SDN.

Basing the sFlow-RT fabric controller on the widely supported sFlow and OpenFlow standards, and including an open, standards-based programming environment (JavaScript / ECMAScript), makes sFlow-RT an ideal platform for rapidly developing and deploying traffic engineering SDN applications in existing networks.