The Broadcom white paper, Black Hole Detection by BroadView™ Instrumentation Software, describes the challenge of detecting and isolating packet loss caused by inconsistent routing in leaf-spine fabrics. The diagram from the paper provides an example: packets from host H11 to H22 are being forwarded by ToR1 via Spine1 to ToR2 even though the route to H22 has been withdrawn from ToR2. Since ToR2 doesn't have a route to the host, it sends the packet back up to Spine2, which sends the packet back to ToR2, causing the packet to bounce back and forth until the IP time to live (TTL) expires.

The white paper discusses how Broadcom ASICs can be programmed to detect blackholes based on packet paths, i.e. packets arriving at a ToR switch from a Spine switch should never be forwarded to another Spine switch.
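The path rule can be stated compactly in code. The sketch below only illustrates the logic and is not Broadcom's implementation; the `role` table and the `isBlackholePath` function are hypothetical names introduced here, with switch names borrowed from the white paper's example.

```javascript
// Hypothetical role table for the switches in the white paper's example.
const role = { ToR1: 'leaf', ToR2: 'leaf', Spine1: 'spine', Spine2: 'spine' };

// A leaf that receives a packet from a spine and forwards it to a spine
// is sending traffic back up the fabric, which should never happen on a
// healthy leaf-spine network.
function isBlackholePath(prevHop, node, nextHop) {
  return role[node] === 'leaf' &&
         role[prevHop] === 'spine' &&
         role[nextHop] === 'spine';
}

console.log(isBlackholePath('Spine1', 'ToR2', 'Spine2')); // true
console.log(isBlackholePath('H11', 'ToR1', 'Spine1'));    // false
```

Hosts such as H11 are deliberately absent from the table, so traffic entering from a server port never matches the rule.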

This article will discuss how the industry-standard sFlow instrumentation (also included in Broadcom-based switches) can be used to provide fabric-wide detection of black holes.

The diagram shows a simple test network built using Cumulus VX virtual machines to emulate a four-switch leaf-spine fabric like the one described in the Broadcom white paper (this network is described in Open Virtual Network (OVN) and Network virtualization visibility demo). The emulation of the control plane is extremely accurate since the same Cumulus Linux distribution that runs on physical switches is running in the Cumulus VX virtual machine. In this case BGP is being used as the routing protocol (see BGP configuration made simple with Cumulus Linux).

The same open source Host sFlow agent is running on the Linux servers and switches, streaming real-time telemetry over the out-of-band management network to sFlow analysis software running on the management server.

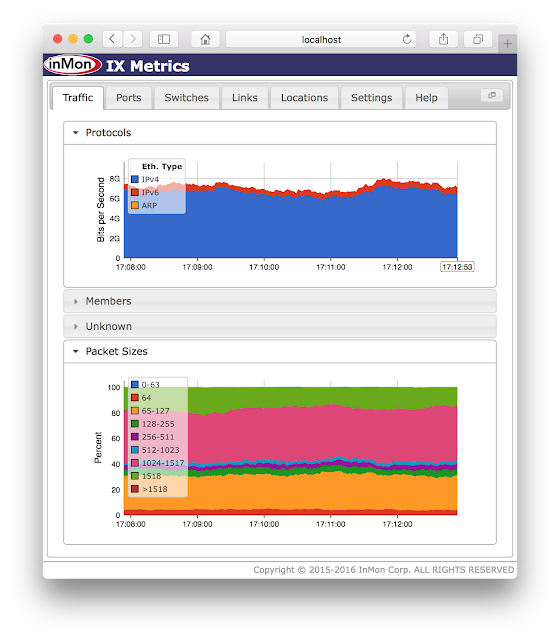

Fabric View is an open source application, running on the sFlow-RT real-time analytics engine, designed to monitor the performance of leaf-spine fabrics. The sFlow-RT Download page has instructions for downloading and installing sFlow-RT and Fabric View.

The Fabric View application needs two pieces of configuration information: the network topology and the address allocation.
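To make the address allocation concrete, the sketch below labels an IPv4 address with the name of the block that contains it, using the rack prefixes of the test network as sample data. The `ipToInt` and `groupFor` helpers are hypothetical and are not part of sFlow-RT; the sketch also assumes that the most specific prefix wins, which is what lets a catch-all `external` entry coexist with the rack blocks.

```javascript
// Address blocks for the example fabric: one /24 per rack, plus a
// catch-all for everything else.
const groups = {
  external: ['0.0.0.0/0'],
  rack1: ['192.168.1.0/24'],
  rack2: ['192.168.2.0/24']
};

// Convert a dotted-quad IPv4 address to an unsigned 32-bit integer.
function ipToInt(ip) {
  return ip.split('.').reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

// Return the name of the group whose longest prefix contains ip
// (assumption: the most specific match wins).
function groupFor(ip, table) {
  const addr = ipToInt(ip);
  let best = null;
  let bestLen = -1;
  for (const [name, cidrs] of Object.entries(table)) {
    for (const cidr of cidrs) {
      const [base, lenStr] = cidr.split('/');
      const len = Number(lenStr);
      const size = Math.pow(2, 32 - len);  // number of addresses in the block
      const start = Math.floor(ipToInt(base) / size) * size;
      if (addr >= start && addr < start + size && len > bestLen) {
        best = name;
        bestLen = len;
      }
    }
  }
  return best;
}

console.log(groupFor('192.168.2.15', groups)); // rack2
console.log(groupFor('8.8.8.8', groups));      // external
```

Plain arithmetic is used instead of bitwise operators because JavaScript bitwise operations work on signed 32-bit values, which would mishandle addresses above 127.255.255.255.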

Topology discovery with Cumulus Linux describes how to extract the topology in a Cumulus Linux network, yielding the following topology.json file:

    {
      "links": {
        "leaf2-spine2": {
          "node1": "leaf2", "port1": "swp2",
          "node2": "spine2", "port2": "swp2"
        },
        "leaf1-spine1": {
          "node1": "leaf1", "port1": "swp1",
          "node2": "spine1", "port2": "swp1"
        },
        "leaf1-spine2": {
          "node1": "leaf1", "port1": "swp2",
          "node2": "spine2", "port2": "swp1"
        },
        "leaf2-spine1": {
          "node1": "leaf2", "port1": "swp1",
          "node2": "spine1", "port2": "swp2"
        }
      }
    }

And the following groups.json file lists the /24 address blocks allocated to hosts connected to each leaf switch:

    {
      "external":["0.0.0.0/0"],
      "rack1":["192.168.1.0/24"],
      "rack2":["192.168.2.0/24"]
    }

Defining Flows describes how sFlow-RT can be programmed to perform flow analytics. The following JavaScript file implements the blackhole detection and can be installed in the sflow-rt/app/fabric-view/scripts/ directory:

    // track flows that are sent back to spine
    var pathfilt = 'node:inputifindex~leaf.*';
    pathfilt += '&link:inputifindex!=null';
    pathfilt += '&link:outputifindex!=null';
    setFlow('fv-blackhole-path',
      {keys:'group:ipdestination:fv', value:'frames', filter:pathfilt,
       log:true, flowStart:true}
    );

    // track locally originating flows that have TTL indicating non shortest path
    var diam = 2;
    var ttlfilt = 'range:ipttl:0:'+(64-diam-2)+'=true';
    ttlfilt += '&group:ipsource:fv!=external';
    setFlow('fv-blackhole-ttl',
      {keys:'group:ipdestination:fv,ipttl', value:'frames', filter:ttlfilt,
       log:true, flowStart:true}
    );

    // log a warning for each flow record matching either definition
    setFlowHandler(function(rec) {
      var msg = {'type':'blackhole'};
      switch(rec.name) {
      case 'fv-blackhole-path':
        msg.rack = rec.flowKeys;
        break;
      case 'fv-blackhole-ttl':
        var [rack,ttl] = rec.flowKeys.split(',');
        msg.rack = rack;
        msg.ttl = ttl;
        break;
      }
      // map the reporting agent and data source to a switch in the topology
      var port = topologyInterfaceToPort(rec.agent,rec.dataSource);
      if(port && port.node) msg.node = port.node;
      logWarning(JSON.stringify(msg));
    },['fv-blackhole-path','fv-blackhole-ttl']);

Some notes on the script:

- The fv-blackhole-path flow definition has a filter that matches packets that arrive at a leaf switch on an inter-switch link and are sent back out on another inter-switch link (the rule described in the Broadcom paper).
- The fv-blackhole-ttl flow definition relies on the fact that the servers are running Linux, which uses an initial TTL of 64. Since it only takes 3 routing hops to traverse the leaf-spine fabric, any TTL value of 60 or smaller is an indication of a potential routing loop and black hole.
- Flow records are generated as soon as a match is found and the setFlowHandler() function is used to process the records, in this case logging warning messages.
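The TTL threshold in the second note is simple arithmetic: a packet that crosses leaf, spine, and leaf is decremented three times, so on a healthy path its TTL never falls below 61. The sketch below reproduces the calculation and the filter string from the script outside sFlow-RT. `INITIAL_TTL`, `maxSuspectTtl`, and `isSuspectTtl` are hypothetical names added for illustration, and the helper assumes the range in the filter is inclusive, as the note implies.

```javascript
// Assumptions: Linux hosts send with an initial TTL of 64, and the fabric
// diameter (leaf to spine to leaf) is 2 inter-switch links.
const INITIAL_TTL = 64;
const diam = 2;

// Same arithmetic as the script: the largest TTL still treated as suspicious.
const maxSuspectTtl = INITIAL_TTL - diam - 2;

// Filter expression, built the same way as in the script.
const ttlfilt = 'range:ipttl:0:' + maxSuspectTtl + '=true' +
                '&group:ipsource:fv!=external';

// Hypothetical helper mirroring the filter for a single sampled packet.
function isSuspectTtl(ttl, sourceGroup) {
  return sourceGroup !== 'external' && ttl >= 0 && ttl <= maxSuspectTtl;
}

console.log(maxSuspectTtl);                // 60
console.log(ttlfilt);
console.log(isSuspectTtl(13, 'rack1'));    // true
console.log(isSuspectTtl(62, 'rack1'));    // false
console.log(isSuspectTtl(13, 'external')); // false
```

Traffic from external sources is excluded because its initial TTL and the number of hops already taken are unknown. A deeper fabric, or hosts with a different initial TTL, would need different values for `diam` and `INITIAL_TTL`.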

Under normal operation no warnings are generated. Adding static routes to leaf2 and spine1 to create a loop to blackhole packets results in the following output:

    $ ./start.sh
    2016-05-17T19:47:37-0700 INFO: Listening, sFlow port 9343
    2016-05-17T19:47:38-0700 INFO: Starting the Jetty [HTTP/1.1] server on port 8008
    2016-05-17T19:47:38-0700 INFO: Starting com.sflow.rt.rest.SFlowApplication application
    2016-05-17T19:47:38-0700 INFO: Listening, http://localhost:8008
    2016-05-17T19:47:38-0700 INFO: app/fabric-view/scripts/fabric-view-stats.js started
    2016-05-17T19:47:38-0700 INFO: app/fabric-view/scripts/blackhole.js started
    2016-05-17T19:47:38-0700 INFO: app/fabric-view/scripts/fabric-view.js started
    2016-05-17T19:47:38-0700 INFO: app/fabric-view/scripts/fabric-view-elephants.js started
    2016-05-17T19:47:38-0700 INFO: app/fabric-view/scripts/fabric-view-usr.js started
    2016-05-17T20:50:33-0700 WARNING: {"type":"blackhole","rack":"rack2","ttl":"13","node":"leaf2"}
    2016-05-17T20:50:48-0700 WARNING: {"type":"blackhole","rack":"rack2","node":"leaf2"}

Exporting events using syslog describes how to send events to SIEM tools like Logstash or Splunk so that they can be queried. The script can also be extended to perform further analysis, or to automatically apply remediation controls.
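Because each warning carries a JSON payload, the log lines are easy to turn into structured events for querying. The following is a minimal sketch of such a parser, assuming the log format shown in the output above; `parseBlackholeEvent` is a hypothetical helper and not part of sFlow-RT.

```javascript
// Parse one log line of the form
//   <timestamp> WARNING: <json>
// and return the blackhole event, or null for any other line.
function parseBlackholeEvent(line) {
  const match = /^(\S+) WARNING: (\{.*\})$/.exec(line.trim());
  if (!match) return null;
  let event;
  try {
    event = JSON.parse(match[2]);
  } catch (e) {
    return null; // payload is not JSON, so not one of the script's messages
  }
  if (event.type !== 'blackhole') return null;
  event.timestamp = match[1];
  return event;
}

const lines = [
  '2016-05-17T19:47:38-0700 INFO: Listening, http://localhost:8008',
  '2016-05-17T20:50:33-0700 WARNING: {"type":"blackhole","rack":"rack2","ttl":"13","node":"leaf2"}',
  '2016-05-17T20:50:48-0700 WARNING: {"type":"blackhole","rack":"rack2","node":"leaf2"}'
];

const events = lines.map(parseBlackholeEvent).filter(e => e !== null);
console.log(events.length);   // 2
console.log(events[0].ttl);   // 13 (as a string, exactly as logged)
```

Only events from the TTL-based flow carry a `ttl` field, so a consumer can tell the two detection methods apart by checking for its presence.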

This example demonstrates the versatility of the sFlow architecture: shifting flow analytics from devices to external software makes it easy to deploy new capabilities. The real-time networking, server, and application analytics provided by sFlow-RT deliver actionable data through APIs and can easily be integrated with a wide variety of on-site and cloud orchestration, DevOps, and Software Defined Networking (SDN) tools.